Meta CEO Mark Zuckerberg recently announced that the U.S. technology company will introduce premium subscription options for its newly launched AI assistant app, Meta AI. In an interview with Stratechery, the 40-year-old outlined plans to expand Meta’s AI features across its entire ecosystem to create a richer user experience.

With the launch of its AI assistant, Meta is entering a competitive field in which Google’s Gemini, OpenAI’s ChatGPT, and Anthropic’s Claude are already established leaders.

The Zuckerberg Perspective

“Our priority this year is… to establish Meta AI as the top personal AI, focusing on customization, voice interactions, and entertainment,” the Meta CEO stated.

“I anticipate that as AI boosts productivity in the economy, individuals will dedicate more time to entertainment and culture, thus presenting an even greater opportunity to develop more engaging experiences across all applications.”

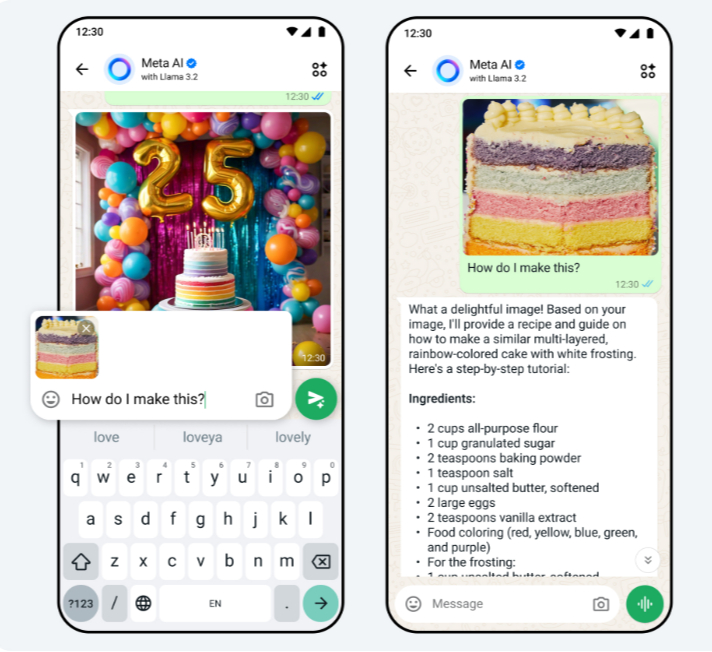

The Meta AI app, which launched in April, joins an extensive social media suite that includes WhatsApp, Instagram, Facebook, and Messenger. It is currently free on both iOS and Android. The app includes a Discover feed where users can share how they use AI. The feed could also serve as a foundation for showing users product suggestions or advertisements.

“I believe there will be a significant opportunity to present product recommendations or advertisements as well as to offer a premium service for those looking to unlock more computing power for enhanced functionality or intelligence,” Zuckerberg noted during the company’s earnings call. However, he was vague about the exact timing for the rollout of the subscription levels.

News for Meta Advertisers

Zuckerberg also emphasized Meta’s dedication to improving the advertising business by leveraging AI.

The Harvard dropout said AI advances have enabled Meta to find audiences more effectively with broad targeting, and that the company encourages advertisers to move away from conventional targeting parameters in the Ads Manager dashboard.

“Over the past 5 to 10 years, we’ve effectively dissuaded businesses from narrowing their targeting. Previously, businesses would approach us with requests to reach specific demographics, and we’d respond, ‘Okay, look, you can propose…'” he explained.

“If they genuinely wish to narrow it down, we offer that as an alternative. But fundamentally, we believe at this stage that we are more adept at identifying the individuals who will connect with your product than you are. So, there’s that aspect.”

Zuckerberg further asserted that Meta intends to take comprehensive control of the creative side of advertising to achieve the best results. Under this strategy, referred to as “infinite creative,” its AI continuously generates and refines ad content, which could completely transform the conventional advertising landscape.

Implications for Users and Advertisers

The monetization of the Meta AI app should not surprise users, given that its competitors have already introduced paid subscriptions for their services. At this point, it is hard to see how Meta’s AI assistant will stand out from the alternatives on the market, particularly for people who use AI only occasionally and for general purposes.

For advertisers, Zuckerberg’s bold vision of automating the advertising process poses significant risks for large agencies and brands. Businesses will be able to set their objectives, but they will have to rely entirely on Meta’s AI to deliver the results they want. This approach could disrupt the entire advertising ecosystem and drive up advertising costs.

What Features Are Available in the Standalone Meta AI App?

The app offers image creation, image editing, and a voice feature that lets users keep talking to the assistant while using other applications on the device. The AI chatbot can answer questions using internet sources and draw on information users have shared on Meta platforms, such as their Facebook profiles or the types of content they engage with on the social network. Those who connect their Facebook and Instagram profiles through their account center get a more tailored experience in the Meta AI app. Users wearing Ray-Ban Meta glasses can interact with Meta AI through both the glasses and the app, starting a conversation on the eyewear and continuing it in the app. The Meta AI app also offers a voice assistant demo that gives more conversational replies, although it does not have direct internet access or real-time information.

Tangent

Meta’s stock was up just over 1% as of 2 p.m. EDT ($555.30), continuing its climb since hitting its lowest point this year on April 21. Even so, the tech giant’s shares are down 7.3% in 2025.

What Other Tech Companies Have Standalone AI Apps?

OpenAI (ChatGPT), Google (Gemini), Microsoft (Copilot), and Anthropic (Claude) all offer standalone AI applications. In January, Elon Musk’s xAI released a standalone application for its Grok chatbot. Like Meta AI, which was initially confined to the company’s social media platforms, Grok was at first restricted to X, formerly known as Twitter.

Crucial Quote

“With a user base that spans billions, Meta possesses access to vast and varied data, allowing it to train highly optimized models on a unique data set,” said Kyle Hill, chief technology officer at business technology firm ANS, adding, “Meta has the potential to distinguish itself, but it must identify its key differentiator.”

Key Background

Although Meta is late in releasing a standalone app compared with its rivals, its AI assistant has been integrated into Facebook, Instagram, Messenger, and WhatsApp since 2023, which has enabled its AI models to be trained on more content. As of December, Meta’s AI assistant had nearly 600 million monthly users. By comparison, one of Meta’s competitors, OpenAI, told CNBC in February that it had 400 million weekly active users. While OpenAI lacks Meta’s advantage of embedding its AI into its own social media platforms, it has secured unprecedented funding in its ambition to lead the AI sector. In March, OpenAI completed a $40 billion funding round, which valued the firm at $300 billion and marked the largest private tech funding round in history. Meanwhile, xAI’s Grok is backed by Musk, the world’s wealthiest individual, along with the company’s supercomputer facility in Memphis, Tennessee, which is capable of training AI models at incredible speeds.

Facebook parent Meta is testing its first internally developed chip for training artificial intelligence systems, a significant step as it seeks to design more of its own custom silicon and reduce its dependence on external suppliers such as Nvidia, two sources told Reuters.

The world’s largest social media company has begun a limited rollout of the chip and plans to ramp up production for broader use if initial tests are successful, the sources said.

This effort to create in-house chips is part of Meta’s long-term strategy to decrease its substantial infrastructure costs as the company makes costly investments in AI tools to stimulate growth.

Meta, which also owns Instagram and WhatsApp, has projected total expenses for 2025 to be between $114 billion and $119 billion, which includes up to $65 billion in capital expenditures primarily driven by investments in AI infrastructure.

One source mentioned that Meta’s new training chip is a dedicated accelerator, specifically designed for AI-related tasks, making it potentially more energy-efficient compared to the graphics processing units (GPUs) typically utilized for AI workloads.

Meta is working with TSMC, a chip manufacturer based in Taiwan, to produce the chip, the same source said.

The test deployment began after Meta completed its first “tape-out” of the chip, a crucial milestone in chip development that involves sending an initial design to a chip fabrication facility, the other source said.

A typical tape-out costs tens of millions of dollars and takes about three to six months to complete, with no guarantee of success. If it failed, Meta would need to diagnose the problem and repeat the tape-out process.

Both Meta and TSMC chose not to offer comments on the matter.

The chip is part of the company’s Meta Training and Inference Accelerator (MTIA) series. The program has had a rocky start over the years and at one point abandoned a chip at a similar stage of development.

However, last year Meta began using an MTIA chip for inference, the process of running an AI system as users interact with it, for the recommendation systems that determine which content appears in Facebook and Instagram news feeds.

Meta executives have said they want to start using their own chips by 2026 for training, the compute-heavy process of feeding AI systems extensive data to “educate” them on how to function.

As with the inference chip, the goal is for the training chip to start with recommendation systems and eventually expand to generative AI products such as the Meta AI chatbot, the executives said.

At a recent conference, Meta’s Chief Product Officer Chris Cox said the company is exploring methods for training recommender systems and, later, ways to integrate training and inference for generative AI.

Cox characterized Meta’s chip development journey as “kind of a walk, crawl, run situation” up until now, but stated that executives view the success of the first-generation inference chip for recommendations as significant.

In the past, Meta discontinued an internal custom inference chip after it underperformed in a limited test rollout comparable to the current endeavor for the training chip, leading the company to instead place substantial orders for Nvidia GPUs in 2022.

Since then, the social media giant has remained one of Nvidia’s major clients, accumulating a vast array of GPUs to train its models, including those for its recommendation and advertising systems, as well as its Llama foundation model series. These units also facilitate inference for the more than 3 billion individuals who access its applications daily.

The worth of these GPUs has come into question this year as AI researchers have voiced skepticism regarding the potential for further advancements by simply “scaling up” large language models through additional data and computing resources.

Such concerns were underscored by the late-January introduction of cost-effective models from the Chinese startup DeepSeek, which enhance computational efficiency by emphasizing inference more than most existing models.

The DeepSeek release triggered a global sell-off in AI stocks, with Nvidia’s shares losing up to a fifth of their value at one point. They later recovered much of that loss as investors bet that the company’s chips will remain the industry benchmark for training and inference, although the shares have since declined again amid broader trade worries in the market.